WORMGPT: A NEW FORM OF CYBERCRIME POWERED BY AI

The threat landscape in today’s digital-first society is constantly changing, and WormGPT, one of the newest AI-driven cyber threats, needs to be addressed right away. It has left businesses and security professionals alarmed. The tool ignores the safeguards that other chatbots apply against data theft, hacking, and other criminal activity. Its potential for harm is considerable: it can help create malware, plan phishing campaigns, and enable complex cyberattacks that may seriously damage systems and networks. WormGPT gives cybercriminals the tools they need to carry out illicit operations with ease, endangering the security of innocent people and organizations.

WormGPT’s key characteristics include the following:

- It is an alternative to mainstream GPT models that was designed specifically for malicious purposes.

- The programme is built on EleutherAI’s open-source GPT-J language model.

- Even inexperienced attackers with little technical expertise can use WormGPT to launch fast, large-scale attacks.

- WormGPT does not refuse malicious requests, since it runs without any ethical constraints.

- The creator of WormGPT is selling access to the programme on a well-known hacker forum.

Background:

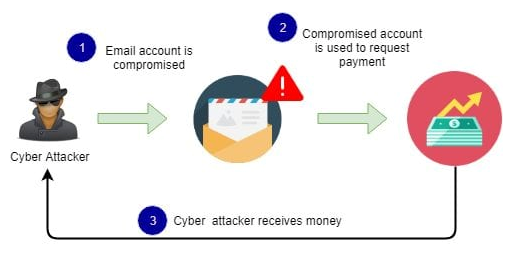

According to its description, WormGPT is “similar to ChatGPT but has no ethical boundaries or limitations.” ChatGPT enforces a set of rules intended to prevent misuse, including refusing requests connected to crime and malware. Users, however, are continuously coming up with workarounds for these restrictions. WormGPT removes the need for such workarounds: on darknet forums, it has been promoted as a tool for adversaries to carry out sophisticated phishing and business email compromise (BEC) attacks.

What Are the Threats from WormGPT?

Here are some of the potential threats from the AI tool:

- It is used for cybercrimes such as hacking, data theft, and other illegal activities.

- It makes convincing phishing emails easy to produce, so it is important to be cautious when going through your inbox.

- The AI tool can craft malware and help set up phishing attacks.

- It equips attackers with the means to launch sophisticated cyberattacks.

- It lets cybercriminals carry out illegal activities with little effort.

How Are Cybercriminals Using WormGPT?

Malicious actors mostly use WormGPT to produce extremely convincing BEC phishing emails, but it has other applications as well. SlashNext, the company that exposed WormGPT, found that it can also write malware in Python and offer guidance on how to carry out damaging attacks. There is a silver lining, though: WormGPT is expensive, which may prevent widespread abuse. The developer sells access to the bot for 60 euros per month or 550 euros per year. The programme has also drawn criticism for poor performance, with one buyer noting that it is “not worth any dime.”

Mitigation:

- Create training programmes that teach staff to recognize and prevent Business Email Compromise (BEC) attacks.

- Improve email verification procedures and flag messages containing keywords like “urgent,” “sensitive,” or “wire transfer” in order to defend against AI-driven BEC attacks.

- Enable multi-factor authentication for email accounts, so that logging in requires more than one piece of information, such as a password plus a dynamic PIN, code, or biometric.

- Avoid opening emails from unknown senders; if you do open one, do not click any links or open any attachments.

- Double-check the sender’s email address and verify the sender’s request through a separate channel.
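
The keyword flagging and sender checks listed above could be sketched roughly as follows. This is a minimal illustration, not a production filter: the keyword list comes from the mitigation points above, while `TRUSTED_DOMAINS`, the function names, and the rule "flag when a keyword appears and the sender is outside a trusted domain" are assumptions made for the example.

```python
import re
from email.utils import parseaddr

# Keywords taken from the mitigation list above; extend as needed.
FLAG_KEYWORDS = ("urgent", "sensitive", "wire transfer")

# Hypothetical allow-list of domains the organization trusts.
TRUSTED_DOMAINS = {"example.com"}


def flag_keywords(text):
    """Return the flagged keywords found in text (case-insensitive, whole words)."""
    found = []
    for keyword in FLAG_KEYWORDS:
        # Allow any run of whitespace between the words of a multi-word keyword.
        pattern = r"\b" + r"\s+".join(re.escape(w) for w in keyword.split()) + r"\b"
        if re.search(pattern, text, flags=re.IGNORECASE):
            found.append(keyword)
    return found


def sender_domain(from_header):
    """Extract the lower-cased domain from a From header value."""
    _, address = parseaddr(from_header)
    return address.rpartition("@")[2].lower()


def review_message(from_header, subject, body):
    """Summarize why a message might need manual verification."""
    hits = flag_keywords(subject + "\n" + body)
    domain = sender_domain(from_header)
    external = domain not in TRUSTED_DOMAINS
    # Assumed policy: escalate only when both signals are present.
    return {
        "keywords": hits,
        "sender_domain": domain,
        "needs_review": bool(hits) and external,
    }


if __name__ == "__main__":
    print(review_message(
        '"CEO" <ceo@examp1e.com>',  # lookalike domain: digit 1 instead of the letter l
        "URGENT: wire transfer needed",
        "Please keep this sensitive.",
    ))
```

A check like this only surfaces messages for a human to verify through a separate channel. It does not replace that verification, and well-written AI-generated emails may avoid obvious trigger words altogether.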