Vulnerabilities in AI-as-a-Service Providers: Privilege Escalation and Cross-Tenant Attacks

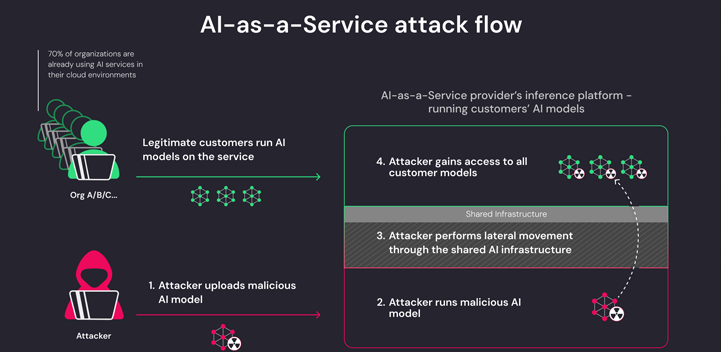

Recent security research has revealed vulnerabilities in AI-as-a-service providers, notably platforms such as Hugging Face. The flaws could allow threat actors to escalate privileges, gain cross-tenant access to other customers’ models, and compromise CI/CD pipelines. This technical analysis examines the underlying mechanisms, their potential consequences, and the mitigation steps available to providers and their customers. Because AI platforms routinely run models supplied by untrusted users, understanding these attack paths is a prerequisite for defending against them.

Understanding the Risks

The vulnerabilities fall into two categories: shared inference infrastructure takeover and shared CI/CD takeover. In the first, an attacker uploads a malicious model in pickle format, has the platform run it, and then uses container escape techniques and weaknesses in the service’s infrastructure to gain unauthorized access. Because the inference infrastructure is shared, arbitrary code execution there can expose other tenants and potentially the entire platform. In the second, an attacker exploits weaknesses in the continuous integration and continuous deployment (CI/CD) pipeline to manipulate the deployment process and inject malicious code. Both vectors can lead to severe breaches, which is why AI-as-a-service providers need robust isolation and proactive risk mitigation.

Inference Infrastructure Takeover

Hugging Face lets users run inference on uploaded pickle-based models on its own infrastructure, even when those models are potentially dangerous. Because loading a pickle file can invoke code chosen by whoever created it, an attacker can craft a PyTorch model that executes arbitrary code when the platform loads it. From inside the inference environment, the attacker can then take advantage of misconfigurations in services such as Amazon Elastic Kubernetes Service (EKS) to obtain elevated privileges within the infrastructure. The result is attacker-controlled code running inside the platform’s environment, which puts both the platform’s integrity and the security of user data at risk.
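To make the pickle risk concrete, here is a minimal and deliberately harmless sketch of the mechanism, using only Python's standard library. The `Payload` class name and the choice of `sum` as the callable are illustrative assumptions; a real malicious model would reference a far more dangerous callable, and nothing here reproduces the researchers' actual exploit.

```python
import pickle


class Payload:
    """Illustrative class; the name and the callable are placeholders."""

    def __reduce__(self):
        # Tell pickle: "to rebuild this object, call sum([1, 2, 3])".
        # Any importable callable could be named here instead.
        return (sum, ([1, 2, 3],))


blob = pickle.dumps(Payload())

# The loader never needs the Payload class. It simply calls whatever
# the byte stream tells it to call and returns the result.
result = pickle.loads(blob)
print(type(result).__name__, result)  # prints: int 6
```

The point of the sketch is that the party loading the file does not decide which function runs; the file's author does. That is why running inference on arbitrary uploaded pickle files amounts to running arbitrary uploaded code.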

Remediation Measures

Following the discovery of these vulnerabilities, Hugging Face took steps to strengthen its security and fix the identified issues. Users should nevertheless remain vigilant and follow security best practices, in particular by exercising caution with untrusted AI models and running them only in sandboxed environments. Organizations and individuals alike should track evolving threats and adapt their practices accordingly.
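As one possible layer of caution, short of a full sandbox and not a replacement for one, Python's `pickle` module allows a loader to restrict which globals a pickle may reference by overriding `Unpickler.find_class`. The sketch below assumes a hypothetical allow-list (`ALLOWED_GLOBALS`) and hypothetical helper names (`AllowListUnpickler`, `safe_loads`); a real model format would need a carefully reviewed list.

```python
import io
import pickle

# Hypothetical allow-list: the only (module, name) pairs a pickle may reference.
ALLOWED_GLOBALS = {
    ("collections", "OrderedDict"),
}


class AllowListUnpickler(pickle.Unpickler):
    """Refuses to resolve any global that is not explicitly allowed."""

    def find_class(self, module, name):
        if (module, name) in ALLOWED_GLOBALS:
            return super().find_class(module, name)
        raise pickle.UnpicklingError(f"blocked global: {module}.{name}")


def safe_loads(data: bytes):
    """Deserialize `data`, rejecting references outside the allow-list."""
    return AllowListUnpickler(io.BytesIO(data)).load()
```

With this in place, a pickled `collections.OrderedDict` of plain lists and floats loads normally, while a pickle that references any other callable (for example `builtins.sum`) raises `pickle.UnpicklingError` before the callable is ever resolved. An allow-list only narrows the attack surface, so untrusted models should still be run in an isolated environment.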

Mitigation Strategies

To address these vulnerabilities, organizations can implement the following remediation steps:

- Enable IMDSv2 with Hop Limit: Require IMDSv2 and set the response hop limit to 1 so that pods cannot reach the Instance Metadata Service (IMDS) and obtain unauthorized roles, such as the node’s role, within the cluster.

- Utilize Trusted Models: Employ models only from trusted sources to mitigate the risk of malicious uploads.

- Implement Multi-Factor Authentication (MFA): Enhance authentication mechanisms to prevent unauthorized access to the platform.

- Avoid Pickle Files in Production: Do not load pickle files in production environments, because deserializing them can execute arbitrary code.
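The IMDSv2 item in the list above can be sketched with the AWS SDK for Python. This is a hedged illustration, not a complete hardening procedure: it assumes `boto3` is installed and credentials are configured, the helper names `imds_hardening_options` and `apply_imds_hardening` are hypothetical, and for EKS node groups the same metadata options would more commonly be set in the launch template than on individual instances.

```python
def imds_hardening_options(instance_id: str, hop_limit: int = 1) -> dict:
    """Build keyword arguments for EC2's ModifyInstanceMetadataOptions call."""
    if not 1 <= hop_limit <= 64:
        raise ValueError("hop_limit must be between 1 and 64")
    return {
        "InstanceId": instance_id,
        "HttpEndpoint": "enabled",
        # Require session tokens, i.e. IMDSv2 only.
        "HttpTokens": "required",
        # With a limit of 1, the token response cannot cross the extra
        # network hop to a pod that does not use host networking.
        "HttpPutResponseHopLimit": hop_limit,
    }


def apply_imds_hardening(instance_id: str) -> None:
    """Apply the options to one instance (assumes boto3 and AWS credentials)."""
    import boto3  # imported lazily so the helper above works without it

    ec2 = boto3.client("ec2")
    ec2.modify_instance_metadata_options(**imds_hardening_options(instance_id))
```

Separating the option builder from the API call keeps the settings easy to review and test without touching a live AWS account.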

CI/CD Takeover

The research also highlights vulnerabilities in CI/CD pipelines, showing how threat actors can use specially crafted Dockerfiles to execute code remotely. From there, attackers can manipulate internal container registries, which threatens the integrity of the entire deployment process. Organizations should therefore build strong security controls into their CI/CD pipelines and regularly review those pipelines for weaknesses, so that unauthorized access and manipulation are caught before they affect deployed applications.

Additional Remediation Measures

In response to these findings, organizations can implement the following remediation steps:

- Secure CI/CD Pipelines: Restrict what build jobs can access, including internal container registries, to prevent unauthorized code execution and manipulation of the deployment process.

- Regular Security Audits: Conduct regular security audits to identify and mitigate vulnerabilities in the infrastructure and application stack.

- Educate Users: Educate users about security best practices, including the risks associated with untrusted AI models and the importance of sandboxed environments.