Google’s Privacy Sandbox Accused of User Tracking by Austrian Non-Profit

Google’s initiative to phase out third-party tracking cookies in Chrome through the Privacy Sandbox has drawn new criticism from the Austrian privacy non-profit noyb (none of your business). Noyb, led by activist Max Schrems, claims that the Privacy Sandbox, though marketed as a privacy improvement, still enables tracking inside the browser, carried out by Google itself. The group argues that this tracking still requires user consent, which Google allegedly obtains deceptively by presenting it as an “ad privacy feature.” The complaint, filed with the Austrian data protection authority, contends that Google uses dark patterns to increase consent rates, leading users to believe they are being protected from tracking. Noyb stresses that even if the Privacy Sandbox is less invasive than third-party cookies, that does not justify violating data protection law, because consent must be informed, transparent, and fair. In its defense, Google asserts that the Privacy Sandbox offers meaningful privacy improvements over existing technologies and aims to balance the needs of all stakeholders. The Privacy Sandbox proposals aim to block covert tracking techniques and limit data sharing with third parties while still allowing personalized ads, but the rollout has been repeatedly delayed by regulatory and developer concerns. Google plans to begin phasing out third-party cookies for 1% of Chrome users globally by the first quarter of 2024, alongside ongoing testing in which users can accept or reject the new form of tracking. Noyb’s complaint is part of its broader campaign against big tech, which includes earlier allegations that OpenAI violated the GDPR and criticism of Meta’s plans to use user data for AI training. The controversy underscores the ongoing debate over user consent, data privacy, and ethical ad tracking, and the need for transparent, user-centric privacy solutions.

Allegations by Noyb

Privacy Sandbox: A Misleading Privacy Improvement?

Noyb, led by activist Max Schrems, argues that although the Privacy Sandbox is marketed as an improvement over invasive third-party tracking, it merely moves the tracking into the browser, where Google itself performs it. Noyb asserts that this internal tracking still requires informed user consent, which Google is allegedly sidestepping by presenting it as an “ad privacy feature.” According to noyb, Google uses deceptive tactics to secure consent, misleading users into thinking they are protected from tracking. Even if this tracking is less invasive than third-party cookies, noyb argues, it still raises significant privacy concerns and questions about compliance with data protection law, since genuine consent must be informed, transparent, and fair.

The Consent Issue

Noyb’s complaint, submitted to the Austrian data protection authority, claims that Google is tricking users into consenting to first-party ad tracking by falsely presenting it as a privacy-enhancing feature. Noyb argues that Google relies on dark patterns to manipulate users into giving consent, leading them to believe that they are being shielded from ad tracking. Despite Google’s portrayal of the Privacy Sandbox as a safeguard against invasive tracking, noyb contends that moving the tracking into the browser as first-party tracking does not remove the need for informed consent. The organization asserts that Google’s approach obscures the true nature of the tracking and may therefore violate data protection law, because valid consent must be informed, transparent, and fair. By disguising tracking as a privacy feature, Google allegedly exploits user trust and undermines the basic principles of consent. In noyb’s view, this raises significant ethical and legal concerns and calls into question whether the Privacy Sandbox is a genuine improvement in user privacy.

Legal Concerns

Noyb further contends that even if the Privacy Sandbox is less invasive than third-party cookie tracking, this does not excuse breaches of EU data protection law. Schrems underscores that for consent to be legally valid, it must be informed, transparent, and fair, qualities he says are missing from Google’s current approach. Because the Privacy Sandbox is presented as a privacy enhancement, noyb argues, users are not told clearly what tracking they are agreeing to, so Google fails to meet the strict requirements of clarity and honesty that valid consent demands. Noyb maintains that without these elements, the consent obtained cannot be considered lawful, which undermines the core principles of data protection regulation. The criticism highlights a broader issue: new technologies and practices must comply with established privacy law and genuinely protect user interests.

Google’s Response

In response to the allegations, Google asserts that the Privacy Sandbox delivers a “meaningful privacy improvement” over existing technologies. The company emphasizes that it is working toward a balanced solution that addresses the needs of all stakeholders. Google argues that the Privacy Sandbox significantly advances user privacy protection while still enabling advertisers to serve relevant ads, making it a responsible alternative to third-party cookies that does not compromise the functionality of online advertising. Google’s statement also points to its ongoing collaboration with regulators and developers to refine the Privacy Sandbox so that it meets privacy standards and addresses public concerns.

Background on Privacy Sandbox

Objectives and Delays

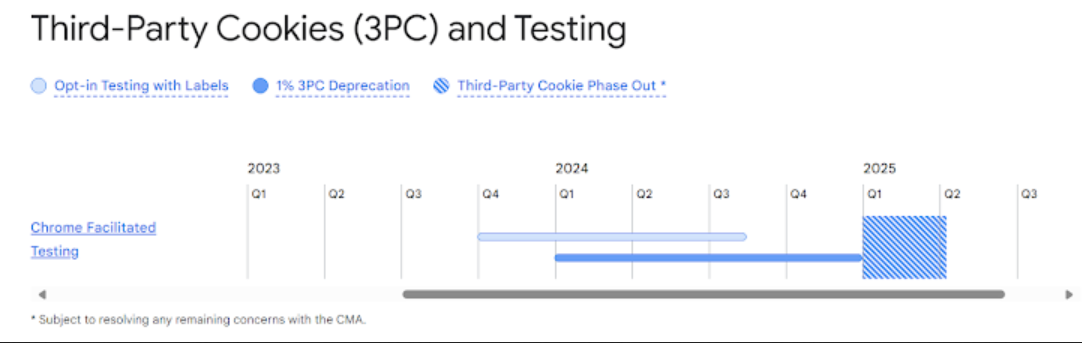

The Privacy Sandbox is a series of Google proposals aimed at blocking covert tracking methods and restricting data sharing with third parties, while still allowing website publishers to deliver personalized advertisements. However, the rollout, specifically the phase-out of third-party cookies in Chrome, has suffered several setbacks: Google has delayed implementation multiple times while addressing feedback and concerns from regulators and developers. Despite these challenges, Google has set a target of beginning to phase out third-party cookies for 1% of Chrome users globally by the first quarter of 2024. This phased approach is part of Google’s strategy to move gradually to more privacy-focused technologies while balancing the needs of advertisers and publishers against user privacy. The delays show how difficult it is to build a privacy-enhancing solution that satisfies regulators and industry standards while keeping the online advertising ecosystem functional. Google says it remains committed to transparency as it continues to refine the Privacy Sandbox proposals.

Current Testing Phase

In preparation for the transition to the Privacy Sandbox, Google is stepping up its testing. Users can currently choose to accept or reject this new form of tracking. However, the way this choice is presented has been criticized as potentially misleading: critics argue that the consent prompt is not transparent, so users may agree to tracking they do not fully understand. The controversy reflects a broader debate over how consent should be obtained online and whether current practices adequately protect user privacy. As Google moves forward with its plans, it will need to address these concerns to ensure that user consent is informed and genuine, upholding the principles of transparency and fairness.

Broader Implications and Historical Context

Noyb’s Track Record

Noyb is well known for filing complaints against major tech companies over alleged privacy violations. Recently, it accused OpenAI, the developer of ChatGPT, of breaching the GDPR by generating false information about individuals. Noyb has also criticized Meta (formerly Facebook) for planning to use publicly shared user data to train AI models, arguing that Meta’s reliance on “legitimate interests” as its legal basis is not adequately justified. These actions reflect noyb’s broader mission to hold tech giants accountable for privacy infringements, to enforce stricter adherence to data protection law, and to defend individuals’ rights in the digital age. This ongoing scrutiny highlights the need for transparency and fairness in how user data is used as technology continues to advance.

Industry-Wide Practices

In its defense, Meta stated that its AI models need to be trained on data that reflects the languages, geography, and cultural references of European users. Meta noted that other companies, such as Google and OpenAI, also use data from European users to train their AI models, and argued that its own approach is more transparent and offers easier controls than those of its industry counterparts. Meta emphasized that building effective AI technologies requires relevant information and asserted that its practices are in line with industry standards. By being open about its data usage and offering more user-friendly controls, Meta claims to prioritize transparency and user autonomy in AI development. Meta presents this stance as showing that its AI systems can be both advanced and respectful of user privacy.

Remediation Steps

- Clearer Consent Mechanism: Ensure user consent mechanisms are straightforward and transparent, avoiding dark patterns.

- Independent Audits: Conduct regular independent audits of the Privacy Sandbox to verify compliance with privacy regulations.

- User Education: Provide comprehensive educational resources for users about how their data is being used and their privacy rights.

- Enhanced Controls: Develop more granular privacy controls within Chrome so that users can easily manage their data-sharing preferences.

- Regulatory Collaboration: Work closely with regulatory bodies to address concerns and ensure Privacy Sandbox adheres to all relevant laws.

- Transparent Communication: Maintain open and honest communication with users and stakeholders about the functionalities and limitations of Privacy Sandbox.

- Continuous Improvement: Regularly update and improve Privacy Sandbox based on user feedback and evolving privacy standards.